Augmented Reality Storytelling

For the final project in my Mobile Augmented Reality (AR) Studio course at NYU Integrated Design & Media, I used AR as a narrative tool to explore a future where intrusive ads and subscription plans rule our daily lives. Here’s the finished product:

Skills Gained

Lens Studio (World Object Controller + Segmentation)

Adobe After Effects

AR-enabled storytelling

Process

I used Lens Studio by Snapchat to create the AR filters; Adobe Photoshop, Illustrator, and After Effects to make the assets; and Final Cut Pro and Logic Pro for video and audio editing. Here’s a summary video explaining my process:

Reviewing past work

A recurring theme in my AR work is social issues and activism.

Voter suppression

My first filter used BlippAR to highlight the struggles BIPOC activists faced during the 2020 US presidential election. At a time when people were protesting the government through the voting system, Trump and his party showed that they could “stomp” out the rebellion and seemingly take full control of the voting process by politicizing and controlling the USPS.

Humor in storytelling

I also revisited a geolocation-based AR narrative I made to describe what it is like to struggle with Attention Deficit Hyperactivity Disorder (ADHD). I enjoyed using humor to explore heavy topics like mental health, and seeing my classmates laugh up a storm!

Work culture

Next, I looked at the Zombie filter I made for Halloween and displayed at our school exhibition. This Snapchat filter exposed the work-eat-sleep-die culture that we’ve established in Western capitalist societies. Immigrant, BIPOC, and working-class communities are conditioned to chase wealth and aspire to the American dream. The reality is that they’ll have to work unfair hours, earn minuscule wages, and be burdened by loans and debt, leaving no room for a fulfilling life.

Establishing a theme

There’s nothing more irritating than a popup banner on a news article that just won’t go away, or a 30-second ad before a YouTube video that takes forever to finish.

Of course, you can purchase a premium subscription to make those ads go away and unlock shiny new features. This model now shows up everywhere, from digital newspaper subscriptions all the way to automobiles.

Tesla has disrupted the automotive market, making premium features like Autopilot and navigation traffic visualization available after the initial purchase via a subscription plan.

So many core features now require a monthly payment! This got me thinking…

What if our lives had a premium subscription?

What would the base plan look like?

How would our mental health be affected?

Starting somewhere

I began thinking about stories to explore in AR. From the day I applied to NYU, I knew I wanted to develop an in-car heads-up display (HUD). I imagined a future self-driving electric car with a windshield that served as in-car entertainment but also highlighted important information. Here’s some inspiration:

Look and feel

Microsoft Windows 95, Avatar, and Waze served as inspiration. I wanted a retro Y2K look, with pixelated lettering, basic graphics, and ugly-looking ads.

The Windows 95 aesthetic is making a comeback, with its nostalgia-provoking sound effects and pixelated interface.

The holographic interface from Avatar uses translucent blue panes with colorful illustrations.

Hyper Reality by Keiichi Matsuda shows how the world could become littered with ads, popups, and augmentations.

I often get frustrated when using Waze, since popup ads distract me from navigating and further clutter the interface.

Initial storyline + assets

As the due date approached, I felt overwhelmed and didn’t know where to start. To solve this, I established an initial narrative around the HUD: the driver is greeted with a dashboard of today’s events, navigation, settings, and entertainment. Then a popup tells them that their premium subscription trial has expired, and they are reverted to the base UI, complete with popup ads.

In Adobe Photoshop and Illustrator, I made the premium and base interfaces, along with the ugly popup ads.

Making the first HUD filter

Using Lens Studio by Snapchat, I created an AR filter that introduces a new screen each time the person filming taps the screen, using a feature called “World Object Controller.” I wrote a short piece of JavaScript that tells the object controller to make a new UI pane appear on top of the previous pane with each tap. The object controller also tracks objects in 3D space: a user can place an object on a plane, and it will stick there even if the user moves the camera around.
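The original script isn’t shown, so here is a minimal sketch, in TypeScript, of the tap-to-advance logic described above. It deliberately avoids Lens Studio’s scripting API: `PaneStack`, `onTap`, and the pane names are hypothetical, and “on top” is modeled simply as a higher layer number.

```typescript
// Hypothetical model of the tap-to-advance HUD. No Lens Studio APIs are used:
// panes are plain objects, and drawing order is represented by a layer number.

interface Pane {
  name: string;
  visible: boolean;
  layer: number; // higher layers are drawn on top of lower ones
}

class PaneStack {
  private readonly panes: Pane[];
  private shown = 0; // number of panes revealed so far

  constructor(names: string[]) {
    // Every pane starts hidden; its layer follows the order it will be revealed in.
    this.panes = names.map((name, i) => ({ name, visible: false, layer: i }));
  }

  // Call once per screen tap. Reveals the next pane on top of the previous one;
  // earlier panes stay visible underneath. Returns undefined once all are shown.
  onTap(): Pane | undefined {
    const pane = this.panes[this.shown];
    if (pane === undefined) {
      return undefined;
    }
    pane.visible = true;
    this.shown += 1;
    return pane;
  }

  // The pane currently drawn on top, if any has been revealed.
  topPane(): Pane | undefined {
    return this.shown > 0 ? this.panes[this.shown - 1] : undefined;
  }

  visibleNames(): string[] {
    return this.panes.filter((p) => p.visible).map((p) => p.name);
  }
}

// Usage: illustrative pane names, loosely following the storyline.
const hud = new PaneStack(["premium dashboard", "trial expired popup", "base UI with ads"]);
hud.onTap();
hud.onTap();
console.log(hud.visibleNames().join(", ")); // premium dashboard, trial expired popup
```

In an actual lens, `onTap` would be wired to the tap event and `visible` to each pane object’s enabled state; that wiring is assumed here, not shown.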

Once this was working, I tested the filter and adjusted the sizing and other details.

First test of the World Object Controller HUD filter. The UI successfully stayed in place atop the Model 3’s dashboard.

Revolving ads filter

One day, I drove over to Hoboken to see my friend Christopher Strawley. He had the amazing idea of having the advertisements start in the car and then follow me around. This changed the trajectory of the storyline, and I was here for it!

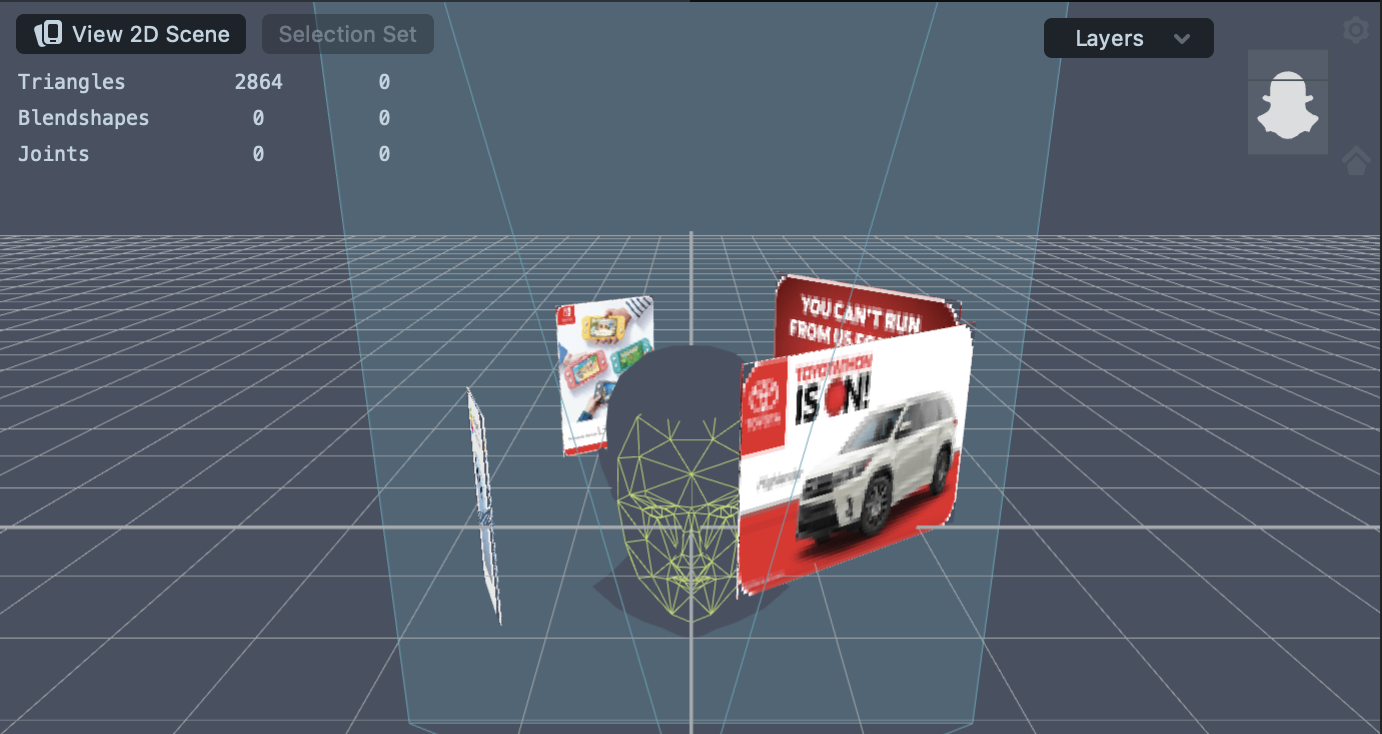

I pixelated a bunch of ads I found online to match the late-’90s aesthetic. Then, Christopher made a head binding model in Autodesk Maya and animated panes circling the head 360 degrees. I brought the model into Lens Studio and placed the ads on the revolving panes.

Animation from Maya brought into Lens Studio, with pixelated ads attached as planes.
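The revolving effect was animated in Maya, but the underlying geometry is simple enough to sketch. Assuming a y-up frame centered on the head, with the ring in the horizontal plane and each pane’s front facing +z before rotation, this hypothetical `orbitPoses` helper spaces the panes evenly around a circle and spins the whole ring once per period.

```typescript
// Hypothetical sketch: poses for ad panes orbiting a head anchor.
// Assumes a y-up frame centered on the head, with the ring in the horizontal x-z
// plane, and that each pane's front faces +z before it is rotated.

interface Vec3 {
  x: number;
  y: number;
  z: number;
}

interface PanePose {
  position: Vec3; // pane center relative to the head anchor
  yawRadians: number; // rotation about +y that turns the pane to face outward
}

function orbitPoses(count: number, radius: number, periodSeconds: number, timeSeconds: number): PanePose[] {
  // The whole ring completes one 360-degree revolution every periodSeconds.
  const spin = (2 * Math.PI * timeSeconds) / periodSeconds;
  const poses: PanePose[] = [];
  for (let i = 0; i < count; i++) {
    // Spread the panes evenly around the circle, then rotate the ring as a unit.
    const angle = (2 * Math.PI * i) / count + spin;
    poses.push({
      position: { x: radius * Math.sin(angle), y: 0, z: radius * Math.cos(angle) },
      // Rotating +z by this yaw gives (sin, 0, cos), the same direction as the
      // position, so each pane faces away from the head.
      yawRadians: angle,
    });
  }
  return poses;
}

// Usage: six panes 0.3 units from the head, one revolution every 8 seconds,
// sampled 2.5 seconds into the animation.
const framePoses = orbitPoses(6, 0.3, 8, 2.5);
```

Recomputing the poses from the elapsed time each frame, rather than accumulating small rotations, keeps the ring from drifting over a long recording.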

By placing a face occluder on the head binding, I was able to cut my head out of the animation so the ads would pass behind it, as if they were obeying the laws of the physical world.

The ads are cut out where they pass behind my head.
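A renderer handles occlusion per pixel through the depth buffer, but the idea can be sketched geometrically. This is a simplification, assuming the head is a sphere and the camera sits outside it: a point on an ad pane is hidden when the line of sight from the camera hits the head before reaching the point. `isHiddenByHead` and its parameters are hypothetical.

```typescript
// Hypothetical, simplified model of what a head occluder achieves: the head is
// approximated as a sphere, and a point is hidden when the camera's line of sight
// hits that sphere before reaching the point. Assumes the camera is outside the head.

interface Vec3 {
  x: number;
  y: number;
  z: number;
}

const sub = (a: Vec3, b: Vec3): Vec3 => ({ x: a.x - b.x, y: a.y - b.y, z: a.z - b.z });
const dot = (a: Vec3, b: Vec3): number => a.x * b.x + a.y * b.y + a.z * b.z;

function isHiddenByHead(camera: Vec3, point: Vec3, headCenter: Vec3, headRadius: number): boolean {
  const toPoint = sub(point, camera);
  const distance = Math.sqrt(dot(toPoint, toPoint));
  if (distance === 0) return false;
  const dir: Vec3 = { x: toPoint.x / distance, y: toPoint.y / distance, z: toPoint.z / distance };
  // Ray-sphere intersection: with a unit direction, |oc + t*dir|^2 = r^2
  // becomes t^2 + 2bt + c = 0, so t = -b +/- sqrt(b^2 - c).
  const oc = sub(camera, headCenter);
  const b = dot(oc, dir);
  const c = dot(oc, oc) - headRadius * headRadius;
  const discriminant = b * b - c;
  if (discriminant < 0) return false; // the line of sight misses the head entirely
  const tHit = -b - Math.sqrt(discriminant); // nearest intersection along the ray
  // Hidden only if the head is hit in front of the camera and before the point.
  return tHit > 0 && tHit < distance;
}

// Usage: camera 5 units in front of a unit-radius head; a pane point 2 units behind it.
const origin: Vec3 = { x: 0, y: 0, z: 0 };
console.log(isHiddenByHead({ x: 0, y: 0, z: 5 }, { x: 0, y: 0, z: -2 }, origin, 1)); // true
```

The depth-buffer approach in a real lens is more general, since it follows the actual face shape rather than a sphere, but the visibility rule is the same: whatever is closer to the camera wins.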

Clothing ad filter

I had a lot of fun with this filter! First, I took videos of myself in several outfits and turned them into transparent GIFs. Then, I placed them in a World Object Controller in Lens Studio and added some text and a logo. Using a Particle Generator, I made snow to create a festive holiday sale theme.
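Lens Studio’s Particle Generator handles the snow internally, so the following is only a generic illustration of how a basic snowfall particle system works, not Lens Studio’s implementation. `Snowfall`, its parameters, and the speed range are hypothetical; the random source is injectable so the behavior can be checked deterministically.

```typescript
// Hypothetical sketch of a minimal snowfall particle system.
// This is NOT Lens Studio's Particle Generator; it only illustrates the idea.

interface Flake {
  x: number;
  y: number;
  speed: number; // fall speed in units per second
}

class Snowfall {
  readonly flakes: Flake[] = [];
  private readonly width: number;
  private readonly top: number;
  private readonly bottom: number;
  private readonly random: () => number;

  constructor(count: number, width: number, top: number, bottom: number, random: () => number = Math.random) {
    this.width = width;
    this.top = top;
    this.bottom = bottom;
    this.random = random;
    for (let i = 0; i < count; i++) {
      // Scatter the initial flakes through the whole volume so snow is already falling.
      this.flakes.push(this.spawn(bottom + random() * (top - bottom)));
    }
  }

  // Create a flake at height y with a random horizontal position and fall speed.
  private spawn(y: number): Flake {
    return {
      x: (this.random() - 0.5) * this.width,
      y,
      speed: 0.5 + this.random() * 0.5,
    };
  }

  // Advance the simulation by dt seconds; flakes that fall below the bottom respawn at the top.
  update(dt: number): void {
    this.flakes.forEach((flake, i) => {
      flake.y -= flake.speed * dt;
      if (flake.y < this.bottom) {
        this.flakes[i] = this.spawn(this.top);
      }
    });
  }
}

// Usage: 200 flakes in a 4-unit-wide column between heights 0 and 3, stepped at 30 fps.
const snow = new Snowfall(200, 4, 3, 0);
snow.update(1 / 30);
```

Recycling flakes at the top instead of creating new ones keeps the particle count constant, which is roughly how fixed-budget particle emitters avoid unbounded growth.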

Deepop ad filter

Sky dome filter

This filter served as a culmination of all the ads. I wanted it to feel overwhelming and intrusive, as if it were taking over reality. I pixelated a bunch of ads in Photoshop and positioned them on 2D planes in Lens Studio, then tilted and reshaped the planes to form a dome above the viewer.

Ads arranged in a hemisphere in Lens Studio
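The planes were placed by hand, but a dome like this can also be laid out procedurally. Here is a minimal sketch, assuming the viewer sits at the origin with y pointing up: ads go on rings at increasing elevation, higher (smaller) rings get fewer ads, and each plane is tilted to face the viewer. `domeLayout` and its parameters are hypothetical.

```typescript
// Hypothetical sketch: place ad planes on rings of a hemisphere centered on the viewer.
// Assumes a y-up frame with the viewer at the origin.

interface Vec3 {
  x: number;
  y: number;
  z: number;
}

interface AdPlacement {
  position: Vec3; // plane center on the dome
  facing: Vec3; // unit vector the plane's front should point along
}

function domeLayout(rings: number, horizonCount: number, radius: number): AdPlacement[] {
  const placements: AdPlacement[] = [];
  for (let k = 0; k < rings; k++) {
    // Elevation above the horizon, from near the horizon up toward the zenith.
    const elevation = ((k + 0.5) / rings) * (Math.PI / 2);
    // Higher rings are smaller circles, so they get fewer ads (at least one).
    const count = Math.max(1, Math.round(horizonCount * Math.cos(elevation)));
    for (let i = 0; i < count; i++) {
      const azimuth = (2 * Math.PI * i) / count;
      // Unit direction from the viewer toward this spot on the dome.
      const dir: Vec3 = {
        x: Math.cos(elevation) * Math.sin(azimuth),
        y: Math.sin(elevation),
        z: Math.cos(elevation) * Math.cos(azimuth),
      };
      placements.push({
        position: { x: radius * dir.x, y: radius * dir.y, z: radius * dir.z },
        // Tilt each plane so it faces back toward the viewer at the origin.
        facing: { x: -dir.x, y: -dir.y, z: -dir.z },
      });
    }
  }
  return placements;
}

// Usage: three rings, eight ads at the horizon ring, dome radius 10.
const ads = domeLayout(3, 8, 10); // 8 + 6 + 2 = 16 placements
```

Scaling each ring’s count by the cosine of its elevation keeps the spacing between neighboring ads roughly even, so the top of the dome doesn’t pile up with overlapping planes.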

To have these ads replace the sky, I used Lens Studio’s Sky Segmentation feature to cut out the sky and show my images in its place:
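Conceptually, sky replacement is per-pixel alpha compositing: a segmentation mask says how likely each pixel is to be sky, and the output blends the replacement image and the camera image by that amount. The following is a minimal sketch under that assumption, not Lens Studio’s implementation; `replaceSky`, the RGB-triple pixel format, and the 0..1 mask convention are hypothetical.

```typescript
// Hypothetical sketch of sky replacement as per-pixel alpha compositing.
// Pixels are RGB triples in 0..255; mask[i] is the assumed probability (0..1)
// that pixel i is sky, as a segmentation model might output.

type RGB = [number, number, number];

function replaceSky(camera: RGB[], replacement: RGB[], mask: number[]): RGB[] {
  if (camera.length !== replacement.length || camera.length !== mask.length) {
    throw new Error("camera, replacement, and mask must cover the same pixels");
  }
  return camera.map((pixel, i): RGB => {
    // Clamp so a noisy mask can never over- or under-shoot the blend.
    const m = Math.min(1, Math.max(0, mask[i] ?? 0));
    const ad = replacement[i] ?? pixel;
    // out = m * replacement + (1 - m) * camera, channel by channel
    return [
      m * ad[0] + (1 - m) * pixel[0],
      m * ad[1] + (1 - m) * pixel[1],
      m * ad[2] + (1 - m) * pixel[2],
    ];
  });
}
```

Using a soft mask instead of a hard sky/not-sky cutoff lets the edges of buildings and trees fade into the ads rather than showing a jagged seam.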

Shadow Government filter

The grand finale required two filters: a lizard face filter and a sky segmentation filter (like the previous one). First, I drew a lizard eyeball in Procreate on my iPad and found a lizard skin texture.


Then, I made a new Lens Studio project and added an Eyeball object to the scene. This allowed me to retexture the viewer’s eyeball! I replaced the sclera texture with the one I drew:

Next, I added a face occluder to texture the skin of the face, and applied the lizard skin texture to it.

Finally, I threw on my raggedy old wig and used the filter to record some creepy footage of the shadow government president. I imported this footage into Adobe After Effects and used the Roto Brush tool to remove the white background and replace it with the Oval Office:

I exported the video and used it to texture the inside of a hemisphere object in Lens Studio. I used this as a sky dome, then used Sky Segmentation to replace the sky with the video hemisphere.

Narrative Themes

My initial approach was to storyboard the entire narrative and then create the AR experiences, but that quickly became overwhelming.

Instead, I took the inverse approach: creating interesting AR filters first, then carving the story out around them. This gave me more time to explore the effects of AR and subscription services on our mental well-being.

By the end of the process, I showed how future immersive technology could affect our lives:

- Users will grow accustomed to premium features and may find the base feature package disappointing. Core fixtures of our lives may become subscriptions, creating an overwhelming number of bills to pay.

- Imagine those pesky popup ads following you from your web browser into reality. This could get extremely irritating and lead to sensory overload. Tailored ads specific to your location could litter your surroundings, increasing feelings of anxiety and creating an unpleasant environment.

- How would you feel if you could see things that your peers could not? This experience could be incredibly isolating and cause people to write you off. Your trauma may be trivialized or glossed over. I could see this happening more often as AR glasses become more common.

Challenges

I’ve made videos for fun since I was a kid, but this was the first time I had to make one for a graded assignment, in a set amount of time. I found that giving myself time to iterate on my narrative, rather than sticking to one story, was the best approach. Managing my time was tough for me at first, so I decided to just dive in and start making filters.

The more AR experiences I created, the better I could weave a narrative to connect them. Overall, I learned to just put myself out there and start creating. It is easier for my brain to narrow down many options than to start from scratch and plan everything perfectly.

Future Improvements

I kept a close eye on timing to make sure the story didn’t drag on too long, but I still think I could use more experience crafting strategically timed narratives. I want to improve my comedic timing and story planning abilities.

On the AR side, I want to learn how to do more with Lens Studio, since I’ve only scratched the surface. I’d like to use LiDAR-based room scanning to make objects feel even more embedded in reality. I also want to grow more comfortable creating and using 3D objects.